Computation plays a big part in astronomy research at IU, from massive N-body calculations and the analysis of very large datasets to our day-to-day desktop data manipulation. Fortunately, IU provides the computational resources to make all this possible.

IU’s Carbonate cluster has 72 general-purpose compute nodes, each with 256 GB of RAM, and eight large-memory compute nodes, each with 512 GB of RAM. Carbonate is designed to support data-intensive computing and includes a specialized GPU partition for researchers with applications that require GPUs.

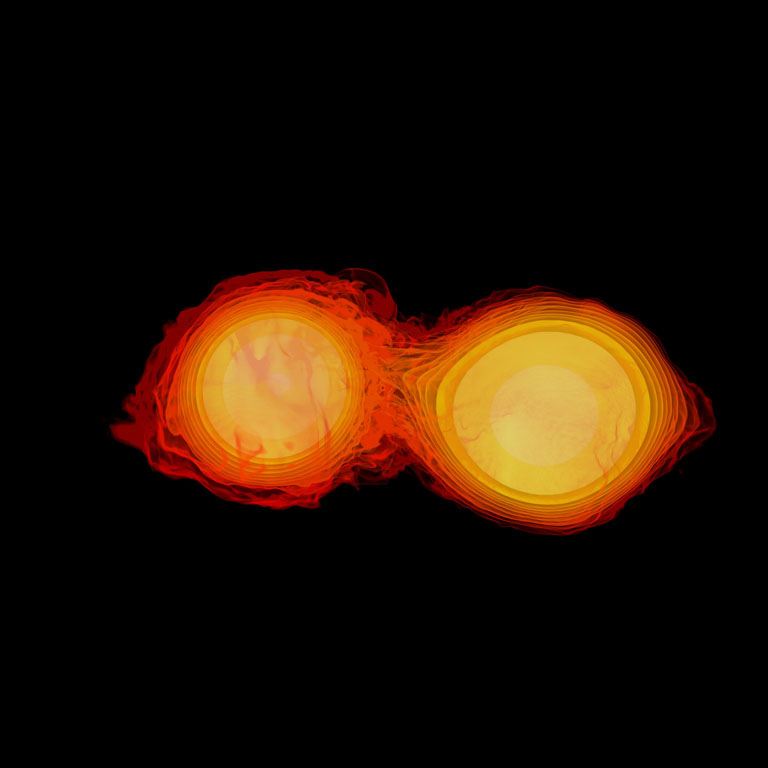

Enrico Vesperini’s research group uses Carbonate to study the formation and dynamical evolution of multiple stellar populations in globular clusters, the evolution of young clusters in merging galaxies, and the evolution of Galactic and extragalactic globular cluster systems. Three investigators in Vesperini’s group are among the top ten users of Carbonate, and they have been assigned their own node full-time to support their research.

Vesperini’s group also uses IU’s other supercomputers, including Big Red 3, Big Red 200, and Quartz. Big Red 200, among the world’s fastest university-owned supercomputers, is an HPE Cray EX system designed to support scientific and medical research as well as advanced research in artificial intelligence, machine learning, and data analytics. It has a theoretical peak performance of nearly 7 petaFLOPS. Big Red 3 is a Cray XC40 supercomputer dedicated to large-scale, compute-intensive applications that can take advantage of the system’s extreme processing capability and high-bandwidth network topology.

IU’s high-throughput computing cluster Quartz provides high-capacity processing over extended periods for our high-end, data-intensive applications. Its 92 compute nodes are each equipped with two 64-core AMD EPYC 7742 2.25 GHz CPUs and 512 GB of RAM, with a peak per-node performance of greater than 4,608 gigaFLOPS.
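The 4,608 gigaFLOPS per-node figure can be reproduced from the specifications above at the 2.25 GHz base clock. The short sketch below does that arithmetic. It assumes 16 double-precision floating-point operations per cycle per core, which is what AMD's Zen 2 cores (used in the EPYC 7742) deliver with two 256-bit fused multiply-add units; that per-cycle figure is our assumption and is not stated above. The function name `peak_gflops` is purely illustrative, and the cluster-wide total it prints is our own back-of-the-envelope estimate rather than an official number.

```python
# Theoretical peak = sockets x cores per socket x clock (GHz) x FLOPs per cycle.
# ASSUMPTION: 16 double-precision FLOPs/cycle/core (AMD Zen 2, two 256-bit FMA units).

def peak_gflops(sockets: int, cores_per_socket: int, clock_ghz: float,
                flops_per_cycle: int) -> float:
    """Return the theoretical peak performance in gigaFLOPS."""
    return sockets * cores_per_socket * clock_ghz * flops_per_cycle


# One Quartz node: two 64-core EPYC 7742 CPUs at the 2.25 GHz base clock.
node_peak = peak_gflops(sockets=2, cores_per_socket=64, clock_ghz=2.25,
                        flops_per_cycle=16)

# Scale to all 92 compute nodes and convert gigaFLOPS to teraFLOPS.
cluster_peak_tflops = 92 * node_peak / 1000

print(f"Per-node peak: {node_peak:,.0f} GFLOPS")
print(f"Cluster peak:  {cluster_peak_tflops:,.1f} TFLOPS")
```

Running it prints a per-node peak of 4,608 GFLOPS and a cluster-wide peak of roughly 424 TFLOPS. Real applications sustain only a fraction of this theoretical value.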

IU’s large file systems are also critical for our research in astronomy. These file systems support three important data repositories: the WIYN One Degree Imager (ODI) archive, the Blanco DECam Bulge Survey (BDBS) archive, and the Kitt Peak FTS spectroscopic archive. IU is also responsible for all pipeline reduction of ODI data and for data analysis tools through the ODI Portal, Pipeline and Archive (ODI-PPA). The ODI-PPA contains more than 60 TB of data and nearly 40,000 processed, science-ready images from WIYN. For the BDBS archive, IU carried out all photometric measurements over the 200-square-degree survey field, measuring 250 million sources in six filters down to the main-sequence turnoff.

See:

- https://news.iu.edu/live/news/29996-iu-high-performance-computing-resources-facilitate
- https://news.iu.edu/live/news/30947-researching-1-billion-years-of-cosmic-history-with

Other IU faculty and students take advantage of high-performance computing infrastructure through the Research Desktop (RED) application. RED is particularly helpful for students accustomed to a graphical user interface as they transition to the Linux command-line environment most often used for research applications. RED runs on a personal device, such as a laptop or desktop computer, to launch and control a session on one of more than two dozen dedicated supercomputer nodes, each equipped with either two 8-core or two 12-core Intel Xeon processors and 256 GB of RAM. From the RED GUI, users can access their home directory space on the supercomputer, run graphical applications remotely without noticeable latency, and transfer data securely between a personal device and their research computing accounts.

The College of Arts